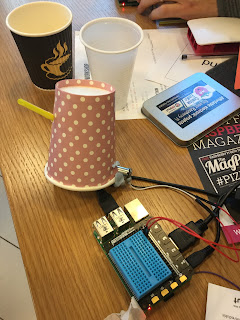

Figure 1

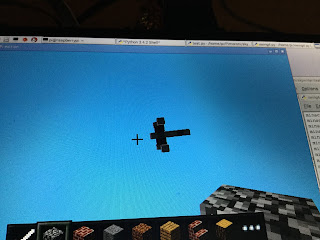

In this post, Pimoroni's Skywriter HAT is added so that hand movements can make the X-Wing take off, land, and move forward or backward.

It builds on ideas from the book Adventures in Minecraft.

Figure 2

Before you start: to use the Skywriter, you need to run `curl -sSL get.pimoroni.com/skywriter | bash` in the terminal.

To start with, the X-Wing is placed above the player by placing blocks roughly in the shape of an X-Wing, using the MinecraftShape class (see Chapter 8 of Adventures in Minecraft).


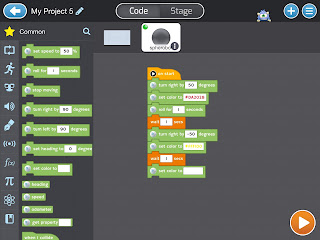

Figure 3

- Find the position of the player;

- To avoid building on top of the player, the starting position of the X-Wing is set by:

  - adding 5 to the x position of the player;

  - adding 10 to the y position of the player (the bit I have to keep reminding myself is that the y-axis is vertical);

  - adding 5 to the z position of the player;

- Using these values, build the X-Wing from Wool blocks: 0 for white and 14 for red;

- If a flick starts at the top of the board (or "north") this moves the X-Wing down towards the ground;

- If a flick starts at the bottom of the board (or "south") this moves the X-Wing vertically up;

- If a flick starts on the right of the board (or "east") the X-Wing moves backwards horizontally;

- If a flick starts on the left of the board (or "west") the X-Wing moves forward.
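The positioning steps in the list above can be tried out without a Minecraft server. What follows is a minimal sketch, not part of the original program: `start_position`, `world_blocks` and the three-block `nose` are hypothetical names made up for illustration, and the sketch assumes that a shape is drawn by adding each block's relative offset to the shape's origin. The default offsets follow the list above.

```python
# Plain-Python sketch: no Minecraft server or mcpi import is needed.
WHITE, RED = 0, 14  # wool data values used in this post


def start_position(player, dx=5, dy=10, dz=5):
    """Return the X-Wing origin, offset from the player's (x, y, z) tile."""
    x, y, z = player
    return (x + dx, y + dy, z + dz)


def world_blocks(origin, shape):
    """Turn (rel_x, rel_y, rel_z, colour) offsets into world coordinates."""
    ox, oy, oz = origin
    return [(ox + rx, oy + ry, oz + rz, colour) for rx, ry, rz, colour in shape]


# A hypothetical three-block slice of the fuselage, as relative offsets.
nose = [(0, 0, 0, WHITE), (-1, 0, 0, WHITE), (-2, 0, 0, RED)]

origin = start_position((10, 0, -3))
print(origin)                      # (15, 10, 2)
print(world_blocks(origin, nose))  # [(15, 10, 2, 0), (14, 10, 2, 0), (13, 10, 2, 14)]
```

Keeping the shape as relative offsets is what makes it easy to move: only the origin changes, and every block follows.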

The complete program:

```python
from mcpi.minecraft import Minecraft
from mcpi import block
import mcpi.minecraftstuff as minecraftstuff
import time
import skywriter
import signal

mc = Minecraft.create()

# Start the X-Wing away from the player so it is not built on top of them.
xPos = mc.player.getTilePos()
xPos.x = xPos.x + 5
xPos.y = xPos.y + 5  # y is the vertical axis
xPos.z = xPos.z + 5

# Wool blocks relative to xPos: data value 0 is white, 14 is red.
xWingBlocks = [
    minecraftstuff.ShapeBlock(0, 0, 0, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(-1, 0, 0, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(-2, 0, 0, block.WOOL.id, 14),
    minecraftstuff.ShapeBlock(-3, 0, 0, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(1, 0, 0, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(0, 1, 0, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(1, 1, 0, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(2, 0, 0, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(2, 1, 0, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(1, 2, -1, block.WOOL.id, 14),
    minecraftstuff.ShapeBlock(1, 2, 1, block.WOOL.id, 14),
    minecraftstuff.ShapeBlock(1, -1, -1, block.WOOL.id, 14),
    minecraftstuff.ShapeBlock(1, -1, 1, block.WOOL.id, 14),
    minecraftstuff.ShapeBlock(1, 3, -2, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(1, 3, 2, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(1, -2, -2, block.WOOL.id, 0),
    minecraftstuff.ShapeBlock(1, -2, 2, block.WOOL.id, 0),
]

xWingShape = minecraftstuff.MinecraftShape(mc, xPos, xWingBlocks)


@skywriter.flick()
def flick(start, finish):
    # Each flick moves the X-Wing nine blocks, one block every 0.1 s.
    if start == "south":  # flick from the bottom: move up
        for count in range(1, 10):
            time.sleep(0.1)
            xWingShape.moveBy(0, 1, 0)
    if start == "west":  # flick from the left: move forward
        for count in range(1, 10):
            time.sleep(0.1)
            xWingShape.moveBy(-1, 0, 0)
    if start == "east":  # flick from the right: move backward
        for count in range(1, 10):
            time.sleep(0.1)
            xWingShape.moveBy(1, 0, 0)
    if start == "north":  # flick from the top: move down
        for count in range(1, 10):
            time.sleep(0.1)
            xWingShape.moveBy(0, -1, 0)


# Keep the script alive so the Skywriter callbacks keep firing.
signal.pause()
```
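The four near-identical branches in the flick handler could also be driven by a lookup table. The sketch below is one possible arrangement, not the version used in this post: `FLICK_MOVES`, `glide` and `RecordingShape` are hypothetical names, and `RecordingShape` is only a stand-in that logs `moveBy` calls so the logic can be checked on a machine with no Skywriter or Minecraft attached. The default of nine steps matches `range(1,10)` in the program above.

```python
import time

# Direction a flick starts from -> (dx, dy, dz) applied on every step.
FLICK_MOVES = {
    "south": (0, 1, 0),   # up
    "north": (0, -1, 0),  # down
    "west": (-1, 0, 0),   # forward
    "east": (1, 0, 0),    # backward
}


class RecordingShape:
    """Hypothetical stand-in for MinecraftShape that only logs moveBy calls."""

    def __init__(self):
        self.moves = []

    def moveBy(self, dx, dy, dz):
        self.moves.append((dx, dy, dz))


def glide(shape, start, steps=9, delay=0.1):
    """Move the shape one block per step in the direction mapped to `start`.

    Returns the number of steps taken (0 for an unknown direction).
    """
    move = FLICK_MOVES.get(start)
    if move is None:
        return 0  # not one of the four directions handled here: ignore it
    for _ in range(steps):
        time.sleep(delay)
        shape.moveBy(*move)
    return steps


shape = RecordingShape()
glide(shape, "south", delay=0)
print(len(shape.moves), shape.moves[0])  # 9 (0, 1, 0)
```

With the real hardware, the body of the flick handler would then shrink to a single call such as `glide(xWingShape, start)`.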

For more details on Minecraft and Python, I would suggest going to http://www.stuffaboutcode.com/2013/11/coding-shapes-in-minecraft.html, especially for how to download the software needed to use MinecraftShape.

If you do use or modify this, please leave a comment; I would love to see what others do with it.

All views are those of the author and should not be seen as the views of any organisation the author is associated with.