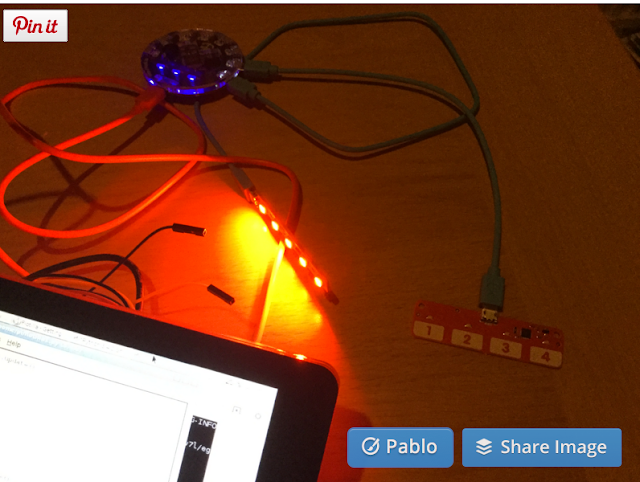

History was made on Saturday as the University of Northampton hosted Northamptonshire’s first-ever Raspberry Jam.

Raspberry Jams see those with an interest in the affordable – and tiny – Raspberry Pi computer get together to share knowledge, learn new things and meet other enthusiasts.

More than 30 people of all ages attended the county’s inaugural Jam at Avenue Campus, which was organised by the University’s Associate Professor in Computing and Immersive Technologies, Dr Scott Turner.

He said: “The Jam was a real success, with a wide mixture of people, including fairly notable experts; those who have a Pi but aren’t quite sure what to do with it; and complete novices.

“It was great to see people who had some sort of Pi-related query have their questions answered, and others showing off what they have managed to get their Pi to do.

“It really helped to inspire the novices to get more involved in the Raspberry Pi, which will ultimately help them develop their coding skills.”

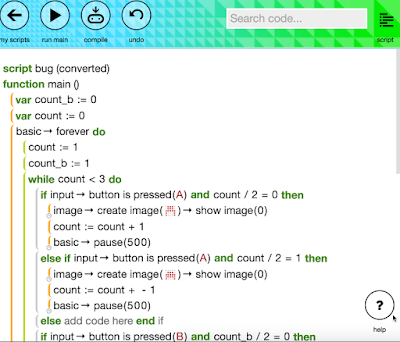

Computing and Science teacher Steve Foster, from Wollaston School, led a session on the popular Minecraft game, and was ably assisted by five of his Year 10 pupils.

One of the pupils, Ellie, said: “One of the groups had a problem with their coding and I managed to solve it for them. I love the challenges a Raspberry Pi can give you, and when you are able to solve the problem it’s really cool.”

The University is committed to making a positive social impact on the people of Northamptonshire and has set itself four ambitious challenges to meet by 2020.

One of these ‘Changemaker+ Challenges’ is to make Northamptonshire the best county in the UK for children and young people to flourish and learn – something the Raspberry Jam has contributed to.
